Artificial intelligence (AI) is already reshaping how care happens, but it also raises AI healthcare privacy concerns that clinics can’t afford to ignore.

And yes, clinics are worried.

In early 2025, 69% of healthcare providers said they’re concerned AI will make data privacy and security issues worse. Also, 72% of healthcare executives now list data privacy as the top risk in AI adoption.

Here’s the issue: AI tools can’t do their job without access to massive volumes of sensitive patient data. And unless every layer of that system is built with strong safeguards, privacy concerns with AI in healthcare become hard to contain.

So if your clinic is already rolling out AI, or planning to, this isn’t just an IT problem. It can quickly turn into an operational and legal one.

Let’s break down what’s at risk, and what you can do about it.

- TL;DR

- Why AI Needs Access to Sensitive Health Data

- Core Privacy Concerns with AI in Healthcare

- AI in Healthcare: Legal Issues and Regulatory Gaps

- Three Real-World Cases That Sparked Privacy Debates

- Ethical Challenges and Governance Needs

- How to Address Privacy Concerns Proactively

- Bottom Line: AI in Healthcare Can’t Scale Without Privacy

- FAQs

TL;DR

If you need the snapshot version, here it is:

- AI is now a core part of care delivery. 88% of healthcare organizations report using AI in at least one business function.

- However, data privacy was the #1 concern for healthcare execs in 2025.

- Clinics need AI to work with patient data, but that opens the door to risk.

- Most breaches happen because of missing controls, not tech failures.

- Without a clear governance framework, AI adoption can backfire fast.

- It’s not just about compliance; it’s about protecting patient trust.

Why AI Needs Access to Sensitive Health Data

AI doesn’t operate in isolation. For it to provide real clinical value, it needs access to the raw material of care: patient histories, lab results, EHRs, insurance records, imaging scans, and everything in between.

Why? Because the more complete the dataset, the better the model performs, especially when it comes to diagnosis, treatment recommendations, and population health insights.

Here’s what’s happening right now:

- According to a McKinsey report from late 2025, 88% of healthcare organizations report using AI in at least one business function. That’s up from 78% in 2024.

- 85% of healthcare leaders say they’re actively experimenting with or rolling out generative AI tools.

- The FDA has authorized 1,247 AI-powered medical devices as of May 2025. Nearly 1,000 of those are used in radiology alone.

That means AI is already deeply integrated into everyday care, from reading chest X-rays to flagging medication errors in the background.

But there’s a catch: this level of performance is only possible if the AI has constant access to high-quality, up-to-date, deeply personal patient information.

And every time that data moves (from one system to another, or from clinic to vendor), the risk grows.

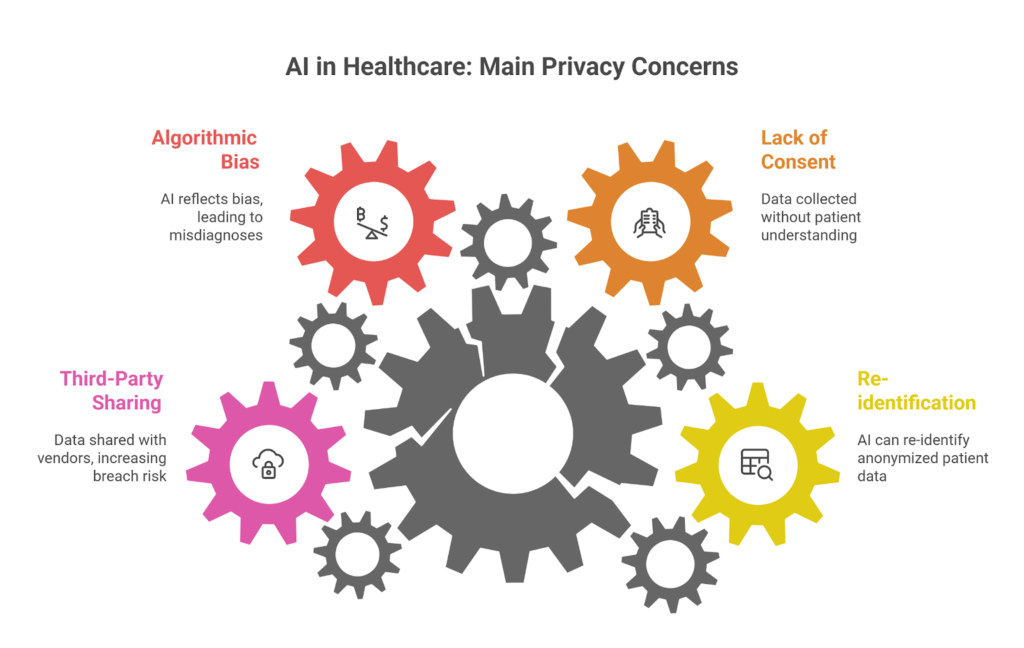

Core Privacy Concerns with AI in Healthcare

This goes beyond storing data safely. The real problem starts when AI systems begin using that data without clear oversight, transparency, or safeguards.

Most clinics don’t realize how easily things can go sideways. One misstep in how information is handled, and you’re looking at a breach, a lawsuit, or worse: losing patient trust.

Here’s where the biggest threats are showing up today:

Re-identification of Anonymized Data

Anonymized doesn’t always mean safe.

AI systems are now powerful enough to cross-reference datasets and re-identify individuals, even when their names or IDs have been removed.

This is especially risky in small or specialized clinics, where the patient population is limited, and the data points (age, condition, zip code) can still lead back to a single person.

And patients are noticing: 37% of Americans think AI will make the security of medical records worse, while just 22% think it will improve it.
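To make the re-identification risk concrete, here is a minimal sketch of a k-anonymity check: it counts how many records share the same combination of quasi-identifiers (the age, condition, and zip code mentioned above). The field names, sample records, and threshold are assumptions for illustration, not a standard or a complete de-identification method.

```python
from collections import Counter

# Hypothetical field names for the quasi-identifiers (age, condition, zip code).
QUASI_IDENTIFIERS = ("age_band", "condition", "zip3")


def group_sizes(records, keys=QUASI_IDENTIFIERS):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[k] for k in keys) for r in records)


def smallest_group_size(records, keys=QUASI_IDENTIFIERS):
    """Return k: the size of the smallest group. k == 1 means someone is unique."""
    sizes = group_sizes(records, keys)
    return min(sizes.values()) if sizes else 0


def risky_records(records, k_threshold, keys=QUASI_IDENTIFIERS):
    """Return records whose group is smaller than the chosen threshold."""
    sizes = group_sizes(records, keys)
    return [r for r in records if sizes[tuple(r[k] for k in keys)] < k_threshold]


# Made-up sample data: names are already removed, yet one patient stands out.
records = [
    {"age_band": "40-49", "condition": "diabetes", "zip3": "331"},
    {"age_band": "40-49", "condition": "diabetes", "zip3": "331"},
    {"age_band": "40-49", "condition": "diabetes", "zip3": "331"},
    {"age_band": "70-79", "condition": "rare_disorder", "zip3": "331"},
]

print(smallest_group_size(records))            # 1
print(risky_records(records, k_threshold=3))   # the single rare_disorder record
```

In a small or specialized clinic, a check like this will often flag many records, which is a signal to generalize or suppress fields before sharing data with a vendor.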

Data Collection Without Explicit Consent

Here’s where most clinics get in trouble. They use third-party AI tools that scrape patient data from EHRs, appointments, or portals, without making it 100% clear to the patient what’s being used or why.

Even when consent is technically “given,” it’s often buried in a long onboarding form or lost in fine print. Patients rarely understand what they’re agreeing to, or how far that data can travel.

According to a 2025 survey, 49% of Americans said they’re uncomfortable with doctors using AI tools instead of relying on their own clinical experience.

That discomfort grows when they realize their health info might be used to train algorithms they never approved.

Algorithmic Bias and Discrimination

AI is only as objective as the data it learns from. And healthcare data? It’s full of human bias.

If the training data skews toward one demographic, the model will too. That could lead to uneven predictions, misdiagnoses, or treatment recommendations that simply don’t fit certain patient groups.

For clinics, this means risk on two fronts:

- Clinical accuracy drops for underrepresented populations.

- Legal exposure increases if the tool contributes to discriminatory care.

Bias isn’t always visible on the surface. But its impact can be life-threatening.
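One simple way to make hidden bias visible is to compare a model's accuracy across patient groups. The sketch below assumes you already have predictions, actual outcomes, and a group label for each case; the group labels and sample rows are made up, and accuracy is only one of several fairness measures you might track.

```python
from collections import defaultdict


def accuracy_by_group(rows):
    """rows: iterable of (group, predicted, actual). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in rows:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(rows):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(rows)
    return max(scores.values()) - min(scores.values()) if scores else 0.0


# Made-up evaluation data: (group, model prediction, confirmed outcome).
rows = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 0, 1), ("group_b", 1, 1),
]

print(accuracy_by_group(rows))  # {'group_a': 1.0, 'group_b': 0.5}
print(accuracy_gap(rows))       # 0.5
```

A large gap doesn't prove discrimination on its own, but it tells you where to look before the tool reaches patients.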

Data Sharing with Third Parties

AI in healthcare doesn’t run in a closed system. Vendors, cloud platforms, and integration tools all need access to the model, or to the patient data feeding it.

This opens the door to what’s now being called “Shadow AI”: systems or agents working behind the scenes that clinics might not fully control or even be aware of.

According to IBM, 13% of organizations reported breaches involving AI models or applications. Worse? 97% of those breached organizations lacked proper AI access controls.

Once the data is out, there’s no easy way to get it back.

Key Insight: In 2025, healthcare remained the most expensive sector for data breaches, averaging $7.42 million per incident. These breaches mostly happened because access and vendor controls were weak.

AI in Healthcare: Legal Issues and Regulatory Gaps

Most privacy rules in healthcare were built for EHRs, not AI. They assume human oversight, static systems, and clear ownership of patient information.

But that’s not how AI works.

Today’s tools can generate content, analyze imaging, make predictions, or even make decisions, often across multiple platforms and vendors. And many of those scenarios fall outside the scope of existing laws.

Here’s where the legal blind spots are most obvious:

HIPAA and Its Limitations

The Health Insurance Portability and Accountability Act (HIPAA), enacted in 1996, is still the main privacy rulebook in U.S. healthcare. It defines how protected health information (PHI) should be handled, shared, and secured.

But HIPAA wasn’t written with AI in mind. It doesn’t directly address:

- The use of generative models in diagnostics.

- Autonomous decision-making by software agents.

- The use of patient data in algorithm training across vendors.

So while clinics may be HIPAA-compliant on paper, their AI systems might still be exposing patient data in ways that fall through the cracks.

And when violations happen, “we followed HIPAA” won’t be a strong defense.

Lack of AI-Specific Healthcare Regulations

There’s still no federal regulation that clearly defines what healthcare AI systems are allowed to do, or how they should be monitored.

That gap has created two big problems:

- Inconsistent practices across vendors, clinics, and states.

- Delayed enforcement, since agencies struggle to keep up with the pace of tech.

Even tools used daily in patient care don’t always go through a robust review.

And when AI systems fail, there’s no standard framework to determine who’s responsible, be it the clinic, the tech provider, or the software itself.

Until those lines are drawn, healthcare providers are left to make judgment calls with high-risk tech and no legal safety net.

Global Regulatory Landscape

Outside the U.S., governments are moving faster:

- Europe’s GDPR already enforces strict rules around consent, data minimization, and the “right to explanation” for automated decisions.

- Canada’s PIPEDA covers patient data privacy, though it’s still catching up with AI-specific issues.

- Countries like Singapore and Australia have published ethical frameworks and national AI strategies, pushing for greater transparency and accountability.

But even globally, there’s no unified standard for how AI should handle sensitive health information.

Three Real-World Cases That Sparked Privacy Debates

Some of the biggest shifts in healthcare AI policy and public opinion came after real-world incidents: breaches, oversights, or vendor failures that exposed just how fragile privacy protections can be.

If you’re leading tech decisions at a clinic or health system, these cases should be on your radar:

1. The “Consent” Debate: Google DeepMind & The NHS

In 2015, Google DeepMind partnered with the Royal Free London NHS Foundation Trust to develop a diagnostic app. The tool, Streams, was built to detect signs of acute kidney injury early.

But to make it work, DeepMind was given access to 1.6 million patient records without asking for explicit patient consent.

That triggered a wave of public backlash. By 2017, the UK’s Information Commissioner’s Office concluded that the NHS had failed to inform patients properly and that the data sharing lacked legal justification.

So, why does this still matter?

Well, it set a precedent. Even life-saving tech doesn’t get a free pass when it bypasses consent. And it raised tough questions for every clinic working with AI vendors:

“Do our patients actually know how their data is being used?”

2. The “Ownership” Debate: Change Healthcare (2024–2025)

This is the largest healthcare data breach in U.S. history, and it’s still unfolding.

In 2024, a ransomware attack hit Change Healthcare, a subsidiary of UnitedHealth. The attackers accessed data from over 100 million Americans, later revised to 190 million by early 2025.

Here’s why this became a national issue: critics pointed out that Change Healthcare processes data for 1 in 3 U.S. patients. That level of centralization means one breach can become a systemic crisis.

It also sparked a major debate about monopoly, infrastructure risk, and AI accountability.

As a direct response, Congress began drafting the Health Infrastructure Security and Accountability Act of 2024 (still under review as of late 2025) to increase oversight for AI-heavy platforms.

3. The Serviceaide “Agentic AI” Exposure (May 2025)

Here’s what modern AI risk looks like in practice.

In May 2025, Serviceaide, a provider of autonomous AI agents for hospitals, suffered a massive breach after a critical security misstep.

One of their systems, a publicly exposed Elasticsearch database, contained records for 483,000 patients from Catholic Health System and stayed unprotected for nearly seven weeks.

The breach happened because Serviceaide’s AI agents had deep backend access to hospital systems like scheduling, IT support, and admin workflows. And a single misconfiguration exposed everything.

This case is a wake-up call about Shadow AI and how easily third-party tools can introduce serious vulnerabilities, often without clinics realizing it.

Ethical Challenges and Governance Needs

Even when AI systems don’t breach laws, they can still cross ethical lines. And in healthcare, that’s enough to damage trust, disrupt care, or cause real harm.

These are the governance gaps we’re seeing most often:

Lack of Transparency in AI Decision-Making

Most AI tools used in clinical settings still operate as black boxes. You get an output (a recommendation, a score, or a flag) but not a clear explanation of how the system got there.

That’s a problem when:

- The AI contradicts a provider’s judgment

- A patient asks for clarity

- The outcome causes harm

And this is about to get more complex. As also noted in McKinsey’s late 2025 report, 62% of organizations were already experimenting with autonomous “AI agents” that make decisions or manage patient data without constant human oversight.

Without explainability, you lose accountability. And that puts both clinical safety and legal defensibility at risk.

The Need for Responsible AI Frameworks

The tech world loves to talk about “ethical AI.” But in most healthcare settings, those principles haven’t made it off the whiteboard.

Here’s what’s missing:

- Internal review boards for new AI tools

- Standards for fairness, explainability, and patient impact

- Ongoing audits to monitor unintended consequences

Without these elements in place, you’re leaving gaps that patients will notice.

And they already do. According to the 2025 KPMG Trust in AI Global Study, only 41% of people in the U.S. say they trust AI, a number lower than the global average.

That trust won’t improve unless clinics show they’re taking governance seriously.

Role of Ethics Committees and Oversight Bodies

If your clinic already has a privacy officer or security lead, that’s a great start. But AI requires multidisciplinary oversight that goes beyond compliance.

Ethics committees should include:

- Clinical staff

- Legal advisors

- IT leadership

- Patient advocates

This is about making sure your AI tools actually support patient care, without crossing lines that affect trust, safety, or equity.

When ethics are treated as a box to check after implementation, things go wrong fast.

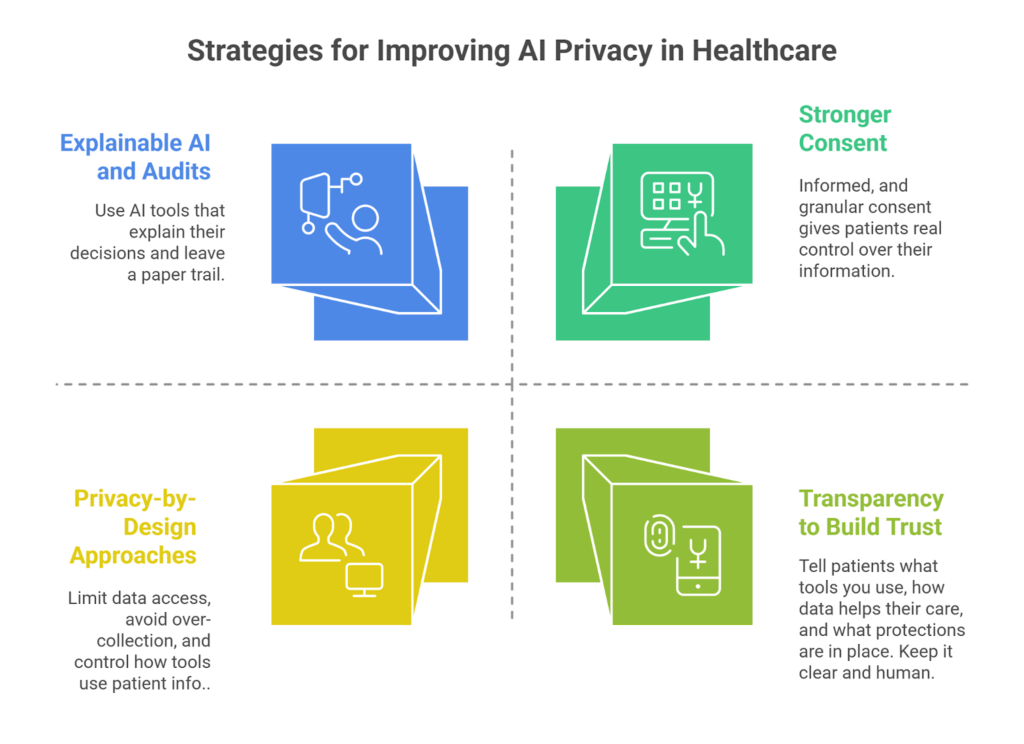

How to Address Privacy Concerns Proactively

You can’t eliminate all privacy risks. But you can get ahead of them.

Most of the damage we’ve seen so far (breaches, consent issues, patient backlash) wasn’t caused by the AI itself. It came from poor planning, rushed implementation, or lack of internal guardrails.

Here’s what works when it comes to protecting patient data before things go wrong:

Privacy-by-Design Approaches

Security shouldn’t be something you patch on at the end. Instead, it needs to be part of the plan from day one, starting with how you choose vendors and structure AI workflows.

That means:

- Choosing models that minimize unnecessary data exposure.

- Avoiding data hoarding just to “train better algorithms”.

- Making sure every AI workflow has clear limits on what it can access, when, and why.

Privacy by design helps you move fast without losing control.
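As a rough illustration of "clear limits on what it can access," the sketch below filters a patient record down to an allowlist of fields before anything is handed to an AI workflow. The workflow names and field names are hypothetical; a real deployment would enforce this in the integration layer or the EHR's own access controls, not only in application code.

```python
# Hypothetical workflows and the only fields each one is allowed to see.
ALLOWED_FIELDS = {
    "appointment_reminder": {"patient_id", "first_name", "appointment_time"},
    "radiology_triage": {"patient_id", "imaging_study_id", "age_band"},
}


def minimize(record, workflow):
    """Return only the fields the named workflow is allowed to receive."""
    if workflow not in ALLOWED_FIELDS:
        # Deny by default: a workflow with no policy gets no data.
        raise PermissionError(f"No access policy defined for workflow: {workflow}")
    allowed = ALLOWED_FIELDS[workflow]
    return {key: value for key, value in record.items() if key in allowed}


# Made-up record for illustration.
patient_record = {
    "patient_id": "p-001",
    "first_name": "Alex",
    "appointment_time": "2025-06-01T09:30",
    "diagnosis": "type 2 diabetes",
    "insurance_id": "INS-12345",
}

print(minimize(patient_record, "appointment_reminder"))
# {'patient_id': 'p-001', 'first_name': 'Alex', 'appointment_time': '2025-06-01T09:30'}
```

The design choice that matters here is the default: an unknown workflow is refused outright instead of receiving the full record.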

Strengthening Consent Mechanisms

One-time consent isn’t enough anymore. AI tools evolve fast, and patients deserve to know when their information is being used in new ways.

Clinics should rethink how consent works, and make it:

- Dynamic: Easy to update as care or tools change.

- Informed: Written in clear language that patients can actually understand.

- Granular: Specific to types of data or use cases, not just blanket approval.

The goal here is clear: to give patients real agency over their data.
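Here is one way dynamic, granular consent could be modeled in software: each choice is stored per data type and per purpose, can be changed at any time, and defaults to "no" when the patient was never asked. The class, field names, and purposes are assumptions for illustration, not a reference to any particular consent platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    patient_id: str
    # Current choices, keyed by (data_type, purpose).
    grants: dict = field(default_factory=dict)
    # Every change, kept so the clinic can show what was agreed and when.
    history: list = field(default_factory=list)

    def record_choice(self, data_type, purpose, granted):
        """Store or update a single, specific consent choice."""
        self.grants[(data_type, purpose)] = granted
        self.history.append((datetime.now(timezone.utc), data_type, purpose, granted))

    def allows(self, data_type, purpose):
        """Deny by default: anything the patient was not asked about is a no."""
        return self.grants.get((data_type, purpose), False)


consent = ConsentRecord(patient_id="p-001")
consent.record_choice("lab_results", "treatment", True)

print(consent.allows("lab_results", "treatment"))       # True
print(consent.allows("lab_results", "model_training"))  # False (never asked)

# Dynamic: the patient can withdraw later, and the change is logged.
consent.record_choice("lab_results", "treatment", False)
print(consent.allows("lab_results", "treatment"))       # False
```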

Investing in Explainable AI and Audits

If you’re using an AI tool to guide care, you should be able to explain how it works. Not in technical terms, but in a way that makes sense to patients and clinicians.

Invest in systems that include:

- Built-in explainability features

- Clear documentation of data inputs

- The ability to audit decisions after the fact

When something goes wrong (and it will), transparency gives you a fighting chance to fix it, learn from it, and maintain trust.
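To show what "the ability to audit decisions after the fact" can look like, the sketch below keeps a tamper-evident log of AI outputs: each entry is hashed together with the previous entry's hash, so any later edit breaks the chain. The entry fields and tool name are made up, and this is a simplified illustration, not a substitute for a vendor's audit tooling.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # Placeholder "previous hash" for the first entry.


def _digest(prev_hash, entry):
    """Hash the previous hash together with a stable JSON form of the entry."""
    payload = json.dumps(entry, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, entry):
    """Add an entry that is chained to the one before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"entry": entry, "prev_hash": prev_hash, "hash": _digest(prev_hash, entry)})


def verify(log):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for item in log:
        if item["prev_hash"] != prev_hash or item["hash"] != _digest(prev_hash, item["entry"]):
            return False
        prev_hash = item["hash"]
    return True


# Made-up entries: which tool produced which output from which inputs.
audit_log = []
append_entry(audit_log, {"tool": "triage_model_v1", "inputs": ["age_band", "vitals"], "output": "urgent"})
append_entry(audit_log, {"tool": "triage_model_v1", "inputs": ["age_band", "vitals"], "output": "routine"})

print(verify(audit_log))  # True

audit_log[0]["entry"]["output"] = "routine"  # Someone edits history...
print(verify(audit_log))  # False
```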

Building Patient Trust Through Transparency

Trust is built through consistent, honest communication. So, let patients know:

- What tools your clinic uses.

- How their data supports care.

- What protections are in place.

- Who to contact with questions or concerns.

This doesn’t need to be a full lecture on AI governance. Even a short email, EHR notice, or front-desk explanation can go a long way in showing patients you respect their privacy.

Bottom Line: AI in Healthcare Can’t Scale Without Privacy

The message is clear: AI won’t work in healthcare unless privacy is treated as a core part of the strategy.

Yes, the potential is huge. But none of that matters if patients don’t trust how their data is being used. And with AI touching everything from radiology to revenue cycle management, the risk surface is only growing.

The good news? You don’t need to tackle this alone. At Medical Flow, we help clinics implement AI and virtual care systems that are built to scale, all without compromising compliance, patient trust, or operational sanity.

If your clinic is thinking about rolling out AI, or fixing what’s already live, let’s talk.

FAQs

What are the main privacy concerns with using AI in healthcare?

The biggest one? Patient data is being used in ways people never agreed to. Many AI applications and tools pull info from EHRs or other systems without making it clear who’s seeing what.

There’s also the risk of decisions being made by black-box systems that no one can fully explain. And if a breach happens, it’s expensive and public.

How does AI impact patient data privacy in healthcare settings?

AI needs a lot of data to work well. That means your patients’ sensitive data moves between tools, vendors, and platforms constantly. If even one part of that chain isn’t secure, it puts the whole system and patient privacy at risk.

Why is privacy a critical issue when implementing AI technologies in healthcare?

Because once trust is broken, it’s hard to rebuild. Patients expect their data to stay private and be handled with care. If a clinic rolls out AI without clear policies, guardrails, or security measures, they’re gambling with something that goes way beyond compliance.

How do HIPAA regulations affect the deployment of AI tools in healthcare?

HIPAA is still the law of the land in the U.S., but it wasn’t written for AI. It doesn’t fully cover how these new tools use data, especially when vendors, cloud platforms, or autonomous systems are involved.

So yes, you might be HIPAA-compliant and still fail to secure data across your full stack.

What are the risks of data breaches when using AI in healthcare, and how can they be mitigated?

If your AI system connects to the wrong vendor, lacks proper access controls, or stores data without the right protections, a breach is just a matter of time. And in healthcare, breaches are costly.

The fix? Prioritize data protection from day one. Don’t wait until something breaks to lock things down.

What are the potential benefits of AI in healthcare despite privacy concerns?

When it’s handled right, AI can be a game-changer. It can speed up diagnosis, reduce admin work, and help doctors focus on patient care. But none of that matters if people don’t trust the system.