AI has already taken hold in healthcare. Doctors rely on it to analyze scans, flag risks, and sort patient records.

According to a 2025 report by Offcall, two out of three doctors use AI every single day. And almost 90% tap into it at least once a week.

But here’s the catch: 81% say they’re frustrated with how their hospitals are using it.

The issue is how the tech is being rolled out. Most tools work like black boxes: they spit out a result, but leave you with zero insight into why. In a clinical setting, that’s dangerous.

Explainable AI in healthcare is the fix for this. It’s what lets teams see what the model’s doing, understand why it made a call, and know when to step in.

Next, we’ll break down how explainable AI (XAI) works, where it fits in day-to-day care, and why it’s become non-negotiable for any modern healthcare system.

Let’s begin.

- TL;DR

- What Is Explainable AI?

- The Limits of Black-Box AI in Medical Settings

- What Makes an AI System Explainable in Healthcare

- How Explainable AI Works in Practice

- Where Explainable AI Is Used in Healthcare

- Explainable AI and Healthcare Regulation

- What Healthcare Organizations Gain From Explainable AI

- How to Assess Explainable AI Solutions

- Bottom Line: Explainability Is the Starting Line

- FAQs

TL;DR

Short on time? Here’s a quick overview:

- Explainable AI in healthcare helps teams understand and validate model decisions.

- Black-box systems create trust gaps, compliance issues, and safety risks.

- Explainability isn’t all-or-nothing; it ranges from basic insights to full traceability.

- Real use cases include diagnostics, risk alerts, clinical decision support, and personalized care.

- Over 80% of clinical decision support studies use XAI methods like SHAP and Grad-CAM.

- The bottom line: If you can’t explain it, you can’t safely use it.

What Is Explainable AI?

Explainable artificial intelligence (XAI) is exactly what it sounds like: AI that can show how it got to a decision.

It doesn’t just give you an answer. It shows what data it used, what patterns it saw, and what made it choose one outcome over another.

That way, people can understand what the system did, check if it makes sense, and decide what to do with it.

In healthcare and other high-stakes areas, having that clarity is the only way to use AI safely.

Why Healthcare Needs Explainable AI

That need for clarity becomes even more urgent in clinical settings.

If your team can’t see how a recommendation was made, they can’t assess whether to follow it. That puts clinicians in a tough spot: either take the output at face value or ignore it completely.

Explainable AI gives them a third option: context. They can see what drove the model’s decision and act accordingly, without hesitation or blind acceptance.

And it’s not just a theoretical benefit:

- 58% of healthcare organizations remain concerned about overreliance on AI, especially for diagnosis, according to Vention Teams.

- In a clinical review, 50% of studies found that explainability increased clinician trust compared to standard black-box systems.

Trust improves when the system makes sense. And that’s exactly what most AI tools are still missing.

The Limits of Black-Box AI in Medical Settings

Black-box models don’t work for clinical teams. They offer results, but leave out the thinking behind them.

When your team gets a result with no explanation, they lose control of the decision. And when something goes wrong, they can’t justify the outcome to a patient, a supervisor, or themselves.

These are the problems that show up fast:

- No visibility into how decisions are made. You can’t explain what you don’t see.

- Hidden bias in the training data, and no way to catch it before it affects care.

- No room to push back. If the model’s wrong, there’s no clear way to say why.

- Resistance from staff. Because if they can’t trust the system, they won’t use it.

And here’s the bigger issue: these tools are already in use, without enough oversight.

As noted in a study from the University of Minnesota, 65% of U.S. hospitals use predictive AI tools, but fewer than half review them for bias. That’s not sustainable.

Explainable AI changes the dynamic. It gives your staff a way to review, understand, and take action based on what the model is doing, not just what it says.

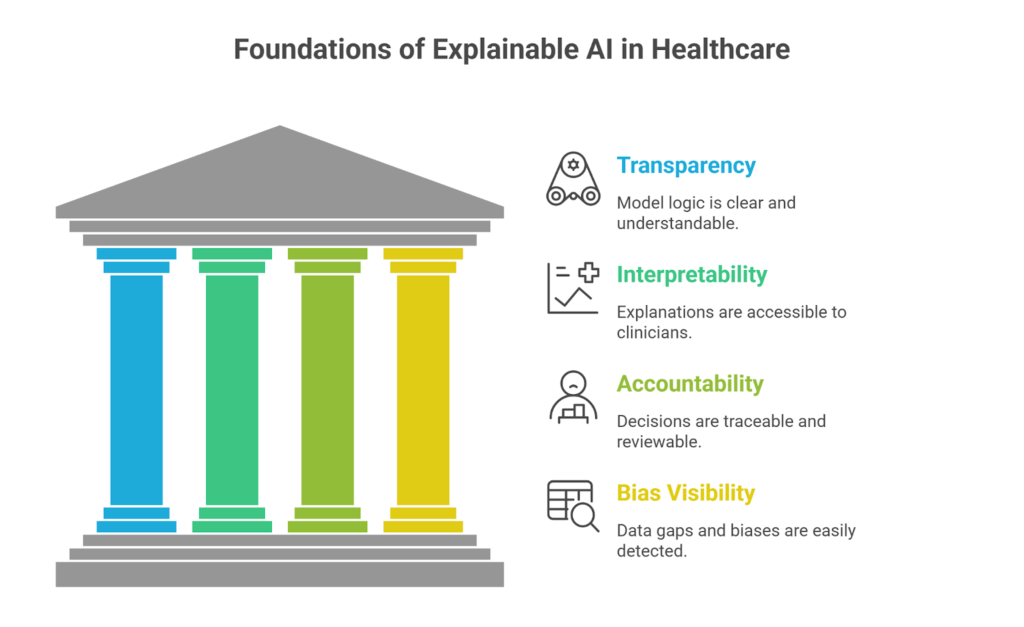

What Makes an AI System Explainable in Healthcare

Not every AI system is explainable. And not every explanation is actually useful. For clinical teams, it’s not enough to “open the model.” You need clarity that makes sense in context.

Here’s what separates explainable AI from the rest:

Transparency in Model Logic

The model needs to show its reasoning. Not just the outcome, but why it prioritized one result over another. When teams can follow the logic, they’re more likely to act on it and spot issues before they escalate.

Human Interpretability

If the explanation only makes sense to the data team, it’s not helping your clinicians.

The system should break things down in a way doctors can actually use. No complex jargon, just clear reasoning tied to patient data.

Accountability and Traceability

When AI is involved in care, someone has to be able to answer for it.

Explainable systems help clarify who’s responsible for what, especially when human decisions are informed (but not dictated) by machine input.

Bias Visibility

Bias isn’t always obvious. Explainable AI helps surface blind spots in the data, like when certain demographics are over- or underrepresented in predictions. The earlier your team sees this, the faster they can address it.
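One simple way to make that kind of gap visible is to compare how often the model flags patients, and how often it misses true cases, across groups. The sketch below is illustrative only: the group labels, the records, and the `rates_by_group` helper are all hypothetical, and a real bias review would use far more data and more than two metrics.

```python
from collections import defaultdict

def rates_by_group(records):
    """Return per-group flag rate and false-negative rate.

    Each record is (group, predicted_flag, actual_outcome), with 0/1 values.
    """
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "positives": 0, "missed": 0})
    for group, predicted, actual in records:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += predicted
        if actual == 1:
            c["positives"] += 1
            if predicted == 0:
                c["missed"] += 1
    summary = {}
    for group, c in counts.items():
        summary[group] = {
            "flag_rate": c["flagged"] / c["n"],
            # False-negative rate: share of true cases the model did not flag.
            "false_negative_rate": c["missed"] / c["positives"] if c["positives"] else None,
        }
    return summary

# Hypothetical records: (group label, model flag, observed outcome).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 1, 0),
    ("B", 0, 1), ("B", 1, 1), ("B", 0, 0), ("B", 0, 1),
]
summary = rates_by_group(records)
for group, stats in sorted(summary.items()):
    print(group, stats)
```

A large difference in false-negative rate between groups doesn’t prove the model is biased, but it’s a signal that someone should look closer before the tool affects care.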

How Explainable AI Works in Practice

So how does this actually play out in real-world tools?

The short answer: explainable AI depends on both the model and the interface. Here’s what that looks like in practice:

- Use of interpretable models from the start. Some systems are built for clarity, even if it means sacrificing a bit of performance. That trade-off can be worth it when your team needs fast decisions they can actually understand.

- Feature importance and attribution. The model highlights which data points influenced a specific prediction. That could be vital signs, lab results, or comorbidities; whatever tipped the scale. Your team sees exactly what mattered.

- Patient-level explanations. Instead of broad metrics, the system explains this result for this patient. That makes it easier to double-check recommendations or tailor care on the spot.

- Visual breakdowns clinicians can act on. Tools like heatmaps, dashboards, or highlighted text let your team scan and understand results fast. These visual cues support clinical reasoning under pressure.

And this isn’t just theory. Right now, over 80% of clinical decision support studies use XAI methods like SHAP and Grad-CAM to make AI outputs interpretable and useful in real time.
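To make the feature attribution and patient-level explanation ideas concrete, here’s a minimal sketch using an interpretable model. It assumes a small logistic regression whose coefficients, feature names, and baseline patient are all made up for illustration (they are not clinically derived). Each feature’s contribution is its weight times how far this patient sits from the baseline, which is similar in spirit to what SHAP reports for a linear model.

```python
import math

# Hypothetical coefficients for an illustrative readmission-risk model.
# These numbers are invented for this sketch, not clinically derived.
INTERCEPT = -4.0
WEIGHTS = {"age_decades": 0.30, "prior_admissions": 0.60, "creatinine_mg_dl": 0.80}
# Hypothetical "average" patient, used as the comparison point.
BASELINE = {"age_decades": 6.0, "prior_admissions": 1.0, "creatinine_mg_dl": 1.0}

def log_odds(patient):
    return INTERCEPT + sum(WEIGHTS[name] * patient[name] for name in WEIGHTS)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def explain(patient):
    """Per-feature contribution to the log-odds, relative to the baseline patient."""
    contributions = {
        name: WEIGHTS[name] * (patient[name] - BASELINE[name]) for name in WEIGHTS
    }
    # Sort so the largest drivers (by absolute size) come first.
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)

patient = {"age_decades": 7.0, "prior_admissions": 3.0, "creatinine_mg_dl": 1.5}
risk = sigmoid(log_odds(patient))
ranked = explain(patient)
print(f"Predicted risk: {risk:.2f}")
for name, value in ranked:
    print(f"{name}: {value:+.2f} log-odds vs. baseline")
```

Because the model is linear, the contributions add up exactly to the gap between this patient’s log-odds and the baseline’s. That additivity is what lets a clinician read the list as “here’s what moved the score, and by how much.”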

Where Explainable AI Is Used in Healthcare

XAI is already being used in day-to-day care. And when it’s done right, it gives your team the confidence to act fast, because they know what the model is doing and why.

Here’s where it’s making the biggest impact:

- Clinical decision support. The model suggests a diagnosis or treatment, and your staff sees which data pushed it there. They don’t have to guess or blindly trust it; they can verify the reasoning and move on.

- Risk alerts and early warnings. A system flags deterioration or a readmission risk. With XAI, it also shows what triggered that alert. That context helps your team respond the right way, not just react.

- Personalized care plans. Instead of generic outputs, explainable tools show why one option fits better than another, based on that patient’s data. That makes personalization easier to trust and defend.

- Medical imaging and diagnostics. When AI reviews a scan, your team needs to see what the model focused on, and what features or areas in the image tipped the decision one way or the other. With explainability in place, that context is right there.

Key Stat: In radiology, this level of explainability has already become standard. According to Vention Teams, by May 2025, 76% of all FDA-approved AI medical devices were in radiology, with most including built-in explainability features.
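For a feel of how an image heatmap can be produced, here’s a minimal sketch of occlusion sensitivity, a simpler technique than Grad-CAM: blank out one patch of the image at a time and record how much the model’s score drops. The 4x4 “image” and the `toy_score` function are hypothetical stand-ins for a real scan and a trained classifier.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Occlusion sensitivity: blank out one patch at a time and record how much
    the model's score drops. Bigger drops mark regions the model relied on."""
    height, width = len(image), len(image[0])
    base_score = score_fn(image)
    heatmap = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]  # copy so the original stays intact
            for r in range(top, min(top + patch, height)):
                for c in range(left, min(left + patch, width)):
                    occluded[r][c] = fill
            drop = base_score - score_fn(occluded)
            for r in range(top, min(top + patch, height)):
                for c in range(left, min(left + patch, width)):
                    heatmap[r][c] = drop
    return heatmap

def toy_score(image):
    # Hypothetical stand-in for a trained classifier: it only "looks at"
    # the bottom-right 2x2 corner of a 4x4 image.
    return sum(image[r][c] for r in (2, 3) for c in (2, 3)) / 4.0

image = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.9, 0.8],
    [0.1, 0.1, 0.7, 1.0],
]
heatmap = occlusion_map(image, toy_score)
for row in heatmap:
    print(" ".join(f"{value:.2f}" for value in row))
```

In this toy case, only the bottom-right patch produces a drop, so that’s the region the heatmap lights up. Production tools typically use faster gradient-based methods, but the question they answer is the same: which part of the image did the model depend on?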

Explainable AI and Healthcare Regulation

AI without visibility is a compliance risk. And if your tools can’t explain their outputs, you’re left filling in the gaps when things go wrong.

Here’s how explainable AI keeps things tighter across clinical, legal, and operational lines:

- Makes regulatory alignment easier. Regulations like HIPAA and GDPR, along with internal review boards, expect decisions to be traceable. With explainability built in, teams can show how each call was made.

- Simplifies documentation and reviews. Clear logic paths make audits faster and quality reviews less painful. Teams spend less time trying to piece together what happened and more time improving what matters.

- Adds a layer of legal and operational safety. When outcomes are challenged, explainable models help reveal where things went wrong. That visibility reduces liability and speeds up internal responses.

If AI is playing a role in clinical care, your team needs more than results. They need a way to stand behind them.
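In practice, “standing behind” a result usually starts with a record of what the model saw, what it said, and what the clinician did with it. Here’s a rough sketch of what one such record could look like. Every field name and value below is hypothetical, and this is not a compliance template; what you actually need to log depends on your own legal and regulatory requirements.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

@dataclass
class PredictionAuditRecord:
    """One traceable entry per model output. All field names are illustrative."""
    model_name: str
    model_version: str
    patient_ref: str        # internal pseudonymous reference, not a direct identifier
    inputs: dict            # the values the model actually received
    output: float           # the score or prediction it returned
    top_factors: list       # the explanation shown to the clinician
    reviewed_by: str
    clinician_action: str   # e.g. "accepted" or "overridden"
    recorded_at: str

def make_record(**fields):
    # Stamp the record with the current UTC time unless one is supplied.
    fields.setdefault("recorded_at", datetime.now(timezone.utc).isoformat())
    return PredictionAuditRecord(**fields)

record = make_record(
    model_name="readmission-risk",  # hypothetical model name
    model_version="0.1.0",
    patient_ref="pt-0001",
    inputs={"prior_admissions": 3, "creatinine_mg_dl": 1.5},
    output=0.75,
    top_factors=[["prior_admissions", 1.2], ["creatinine_mg_dl", 0.4]],
    reviewed_by="clinician-42",
    clinician_action="overridden",
)
line = json.dumps(asdict(record), sort_keys=True)
print(line)
```

Storing the explanation alongside the output and the clinician’s action is the key design choice here: it lets a reviewer reconstruct not just what the model predicted, but what the human saw when they made the call.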

What Healthcare Organizations Gain From Explainable AI

You can have the most advanced model in the world. But if your team doesn’t trust it, it won’t move the needle. XAI solves that problem.

Here’s what clinics and healthcare teams actually gain when they put explainability first:

- Higher adoption and trust across clinical staff. When clinicians understand how a system works, they’re more likely to use it and use it well.

- Stronger collaboration between clinical and technical teams. When everyone can see how the model works, it’s easier to align. No need to translate between roles; both sides can focus on solving the same problem.

- More defensible decisions. Recommendations backed by explainability are easier to justify to patients, boards, or regulators. No scrambling to explain how or why a call was made.

- Scalable AI systems that won’t backfire later. A tool that your team doesn’t trust can’t grow with your organization. Explainable systems avoid that wall early, so you can build on solid ground.

Beyond avoiding risk, this is about making AI actually usable and worth the investment.

How to Assess Explainable AI Solutions

Not all tools labeled “explainable” are actually helpful in practice. Some flood you with charts that no one knows how to read. Others show too little, or hide critical reasoning behind technical jargon.

So before bringing any AI system into your clinic, it’s worth pressure-testing a few key points:

- Clinical usability: Can your staff understand the explanation without calling in the data team? If it only makes sense to engineers, it won’t hold up in real workflows.

- Clarity vs. performance: Some systems prioritize transparency over raw accuracy, and that trade-off can be a good thing. But your team should know where that balance lands before you commit.

- Workflow integration: The best explanations mean nothing if they’re buried in a platform no one wants to use. Look for tools that fit into existing systems, not ones that force you to rebuild everything.

- Scalability and maintenance: Will those explanations still make sense six months in, when your data shifts or your team expands use cases? A system that can’t evolve won’t last.

If a vendor can’t walk you through how their model makes decisions, it’s not ready for your care environment.

Bottom Line: Explainability Is the Starting Line

If the AI can’t explain itself, your team can’t trust it. And without trust, adoption never takes off, no matter how good the model is.

Explainability is what makes the system usable in real care settings. It gives your clinicians the context they need to make confident, defensible decisions.

At Medical Flow, we work with clinics to choose and implement AI tools that offer real support. If your team is evaluating options, we can help you focus on what actually makes them safe to use.

Let’s talk and make explainability part of your AI strategy from day one.

FAQs

What is explainable AI, and why does it matter in healthcare?

Explainable AI shows how an AI model reached a decision. It highlights the inputs, patterns, and logic behind the result. That way, your team can understand it, check if it holds up, and act with confidence.

How is explainable AI different from regular AI?

Regular models give you a result. Explainable AI also shows how it got there. That added visibility makes AI models safer to use and easier to trust in clinical settings.

What tools or methods are used to make AI explainable?

The most common are SHAP and Grad-CAM. SHAP shows how much each input variable contributed to a prediction, while Grad-CAM highlights the areas of an image that influenced the result. These tools help your team see what the model saw.
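If you’re curious what sits underneath SHAP, it’s built on Shapley values: each feature’s average marginal contribution across every order in which features could be “switched on.” The sketch below computes them by brute force for a tiny, hypothetical risk score (the feature names and weights are invented). Libraries such as SHAP rely on faster algorithms and approximations, since brute force stops being practical beyond a handful of features.

```python
from itertools import permutations

def shapley_values(model, patient, baseline):
    """Exact Shapley values: average each feature's marginal contribution
    over every order in which features can be switched from the baseline
    value to the patient's actual value."""
    names = list(patient)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)
        previous = model(current)
        for name in order:
            current[name] = patient[name]
            score = model(current)
            totals[name] += score - previous
            previous = score
    return {name: total / len(orderings) for name, total in totals.items()}

def toy_score(x):
    # Hypothetical risk score with an interaction term, for illustration only.
    return (2.0 * x["lactate"]
            + 1.0 * x["heart_rate_high"]
            + 3.0 * x["lactate"] * x["hypotension"])

baseline = {"lactate": 0.0, "heart_rate_high": 0.0, "hypotension": 0.0}
patient = {"lactate": 1.0, "heart_rate_high": 1.0, "hypotension": 1.0}
values = shapley_values(toy_score, patient, baseline)
for name, value in values.items():
    print(f"{name}: {value:+.2f}")
```

Notice that the interaction between lactate and hypotension gets split evenly between the two features, and that the values add up to the difference between this patient’s score and the baseline score.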

How does explainable AI help with diagnosis and treatment?

It gives clinicians more than an answer; it provides reasoning. They can review how the system interpreted patient data, understand the model’s predictions, and decide when to rely on it or when to take a different path.

Where is explainable AI already being used?

You’ll find it in clinical decision support systems, diagnostics, early warnings, and personalized healthcare applications. Radiology leads the way, but adoption is growing across care workflows.

Why do clinicians need AI to be explainable?

Because they’re still accountable for the outcome. If a predictive model makes a bad call, your team needs to understand why it happened and how to prevent it. Explainability gives them that visibility.